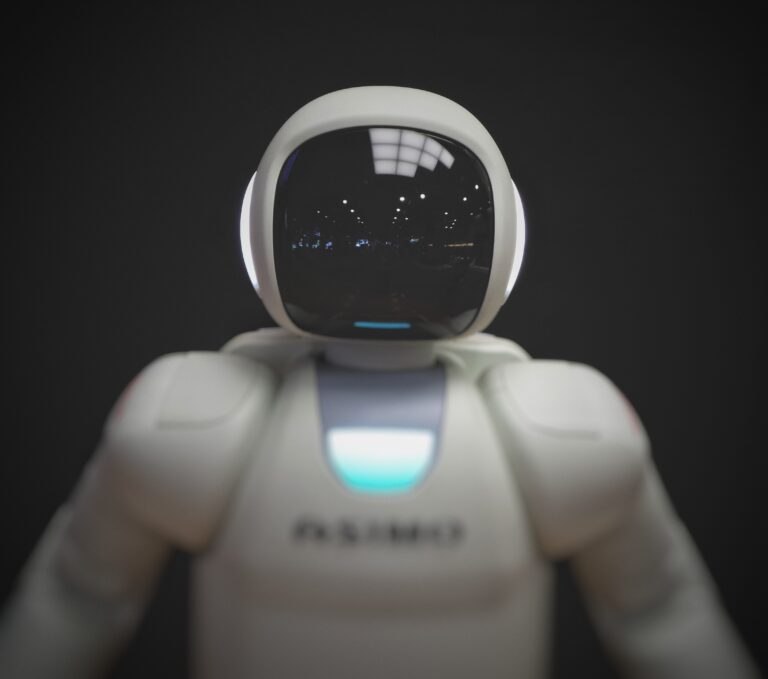

As the development of artificial intelligence (AI) continues to advance rapidly, concerns are also growing about the potential dangers this technology could pose.

Whether it is the automation of some jobs, algorithms with gender and racial biases, or autonomous weapons that operate without human supervision (to name just a few), danger is present on several fronts.

And all of this comes at a time when artificial intelligence is still at an embryonic stage. Let’s take a look at what there is to fear, and how we can curb any damage these kinds of technologies might cause.

The possible dangers connected to Artificial Intelligence

The following are the possible dangers connected to the development and spread of artificial intelligence:

Loss of jobs due to task automation

The introduction of artificial intelligence into the world of work and production activities has paved the way for a lively debate on the possible impact on employment.

In particular, the main concern is about the reduction of jobs due to task automation. Indeed, AI is capable of performing many tasks that were previously performed by humans.

In such a landscape, AI could significantly reduce the number of jobs available to humans.

Importantly, however, AI can also create new job opportunities. Its adoption could lead to new markets, new business opportunities and new professions. To take full advantage of the potential of AI and minimize negative consequences, it is necessary to act prudently and develop appropriate strategies to deal with the changes taking place.

Privacy violations, the risks with artificial intelligence

The use of artificial intelligence (AI) also carries concrete privacy risks. AI is able to learn and improve its performance by processing large amounts of data. However, this data may contain personal and sensitive information.

Should this information be misused or accessed by unauthorized individuals, this could lead to privacy violations and negative consequences for the individuals involved. To mitigate this risk, organizations using AI must take appropriate measures.

These measures could include anonymizing the data, that is, removing any information that could identify an individual; encrypting the data, that is, converting it into a format unreadable to anyone who does not have the decryption key; and limiting access to the data to only those who need it to perform their activities.
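As a rough illustration of two of these measures, the sketch below drops direct identifiers, replaces a record ID with a keyed hash, and restricts which fields a given role can read. The field names (`name`, `email`, `phone`, `user_id`) and both helper functions are hypothetical, chosen only for this example. Note that keyed hashing is strictly pseudonymization rather than full anonymization, since anyone holding the key can recompute the mapping. Encryption is left out here because it is best handled by a vetted cryptography library.

```python
import hashlib
import hmac

# Hypothetical field names; adjust to the actual data schema.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymize(record: dict, secret_key: bytes, id_field: str = "user_id") -> dict:
    """Return a copy without direct identifiers and with the ID replaced by a keyed hash."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if id_field in cleaned:
        # HMAC-SHA256: the same ID and key always give the same pseudonym,
        # but the original ID cannot be read back from the output.
        digest = hmac.new(secret_key, str(cleaned[id_field]).encode("utf-8"), hashlib.sha256)
        cleaned[id_field] = digest.hexdigest()
    return cleaned


def restrict_fields(record: dict, allowed_fields: set) -> dict:
    """Return only the fields a given role is allowed to see."""
    return {k: v for k, v in record.items() if k in allowed_fields}


if __name__ == "__main__":
    raw = {"user_id": 42, "name": "Ada", "email": "ada@example.org", "age": 36}
    safe = pseudonymize(raw, secret_key=b"replace-with-a-real-secret")
    print(safe)
    print(restrict_fields(safe, {"age"}))
```

In practice the secret key would be stored separately from the data, and the list of identifying fields would need review, because combinations of seemingly harmless fields can still identify a person.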

Privacy breaches not only pose a threat to the individuals involved but can also damage the reputation and credibility of the organizations handling the data.

Deepfakes, the threat of manipulation through AI

Deepfakes are another threat associated with the use of AI. These are video or audio content manipulated through AI with the goal of looking authentic while actually being fake, as in the case of Fakeyou.

Such content could spread misinformation or damage the reputation of people or organizations. Deepfakes pose a particularly insidious threat because they can be extremely difficult to detect.

However, some methods to detect deepfakes are in development. One option is to use natural language processing (NLP) techniques to check the consistency between the content of a video and the information available online.

In addition, machine learning algorithms could be trained to spot the flaws in deepfakes and help identify them.

Algorithm bias caused by corrupted data

Artificial intelligence (AI) learns from the information available to it, so AI algorithms can be biased by corrupted data.

This can lead to discrimination against certain groups of people or the dissemination of incorrect information.

To mitigate this risk, measures should be taken to ensure that the data used for AI are accurate and not influenced by unconscious bias. These measures could include critical analysis of historical data, the use of representative and diverse data, and the adoption of control mechanisms to verify data quality.
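One simple form such a control mechanism could take is a check on how well each group is represented in a dataset and how outcomes differ between groups. The sketch below is only an illustration: the record layout, the `group` and `hired` keys, and the helper names are assumptions made for this example, and a low ratio is a signal to investigate the data rather than proof of bias.

```python
from collections import Counter, defaultdict


def group_shares(records, group_key):
    """Fraction of the dataset that belongs to each group."""
    counts = Counter(r[group_key] for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def positive_rates(records, group_key, label_key):
    """Fraction of records with a positive label, computed per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for r in records:
        totals[r[group_key]] += 1
        if r[label_key]:
            positives[r[group_key]] += 1
    return {group: positives[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Lowest positive rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


if __name__ == "__main__":
    # Hypothetical historical hiring data.
    data = [
        {"group": "A", "hired": True},
        {"group": "A", "hired": True},
        {"group": "A", "hired": False},
        {"group": "A", "hired": False},
        {"group": "B", "hired": True},
        {"group": "B", "hired": False},
        {"group": "B", "hired": False},
        {"group": "B", "hired": False},
    ]
    rates = positive_rates(data, "group", "hired")
    print(group_shares(data, "group"))
    print(rates)
    print(rate_ratio(rates))
```

A check like this fits naturally before training: if one group is barely represented or its outcome rate is far below another's, the historical data deserves the critical analysis described above.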

Socioeconomic inequities, are they likely to increase due to AI?

The use of artificial intelligence (AI) could also lead to an increase in socioeconomic inequity if not managed appropriately.

If AI is used to improve the efficiency of companies, this could lead to a reduction in costs and thus an increase in profits, but not necessarily an increase in workers’ wages. It is therefore essential to take steps to ensure that AI is used fairly and that its benefits are distributed equitably.

This could include adopting policies that promote ongoing training and retraining of workers, creating AI jobs, and promoting initiatives that improve access to education and job opportunities.

In addition, it is crucial to pay attention to the inequality that exists in access to AI. Indeed, if AI is used primarily by large companies and organizations with financial resources, this could lead to a further increase in inequality.

To counter this risk, it may be necessary to promote policies that encourage access to AI by all organizations, regardless of their size and degree of development.

Market volatility

The use of artificial intelligence (AI) could also lead to increased market volatility. For example, with AI it could be possible to conduct high-frequency financial transactions, which could lead to sudden and unexpected price fluctuations.

In addition, AI could make it possible to develop automated trading strategies, which could lead to greater efficiency but also greater volatility.

It is therefore important that regulators develop appropriate policies to mitigate the risk of market volatility arising from the use of AI. This could include adopting rules to limit the speed of financial transactions, to prevent market manipulation, and to ensure transaction transparency.
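To make the idea of limiting transaction speed concrete, here is a minimal sketch of a token-bucket limiter that caps how many orders a trading system may submit per second. It is not based on any actual regulation or exchange rule: the `OrderRateLimiter` class, its parameters, and the numbers used are all hypothetical.

```python
import time


class OrderRateLimiter:
    """Token bucket: allows short bursts but caps the sustained order rate."""

    def __init__(self, max_orders_per_second: float, burst: int, clock=time.monotonic):
        self.rate = max_orders_per_second  # tokens added per second
        self.capacity = burst              # maximum tokens that can accumulate
        self.tokens = float(burst)         # start with a full bucket
        self.clock = clock                 # injectable so the limiter can be tested
        self.last = clock()

    def allow(self) -> bool:
        """Return True if an order may be sent now, consuming one token."""
        now = self.clock()
        # Refill in proportion to the elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


if __name__ == "__main__":
    limiter = OrderRateLimiter(max_orders_per_second=5, burst=2)
    for i in range(4):
        print(f"order {i}: {'sent' if limiter.allow() else 'rejected'}")
```

A token bucket is only one possible design. It tolerates brief bursts of activity while bounding the long-run rate, which is the trade-off a speed limit on transactions would have to settle.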

In addition, there is a need to promote collaboration between market participants and AI developers to develop innovative solutions that can reduce the risks arising from the use of AI.

For example, it could be useful to develop AI algorithms that can predict price fluctuations and help prevent the negative consequences of high-frequency financial transactions.

Weapons automation

Finally, the use of artificial intelligence (AI) in the field of weapons could lead to serious consequences for international security.

Weapons automation could lead to increased weapon lethality and further distance between combatants and their victims. It is therefore essential that the international community develop appropriate policies to regulate the use of AI in weapons and prevent possible negative consequences.

Some international organizations are already starting to discuss these issues and develop rules for the use of autonomous weapons, but further efforts are necessary to ensure that AI is used safely in the military field.

In addition, it is important to promote collaboration among countries to develop uniform international rules for the use of AI in weapons and to prevent possible disputes and conflicts arising from the misuse of AI in the military.
